A Go Project: Load Balancer, TCP Proxy, mTLS, and Stress Testing for Fun

Youcef Guichi, July 05, 2025


This project isn’t meant to be production-ready — it’s a playground to explore core infrastructure concepts through code.

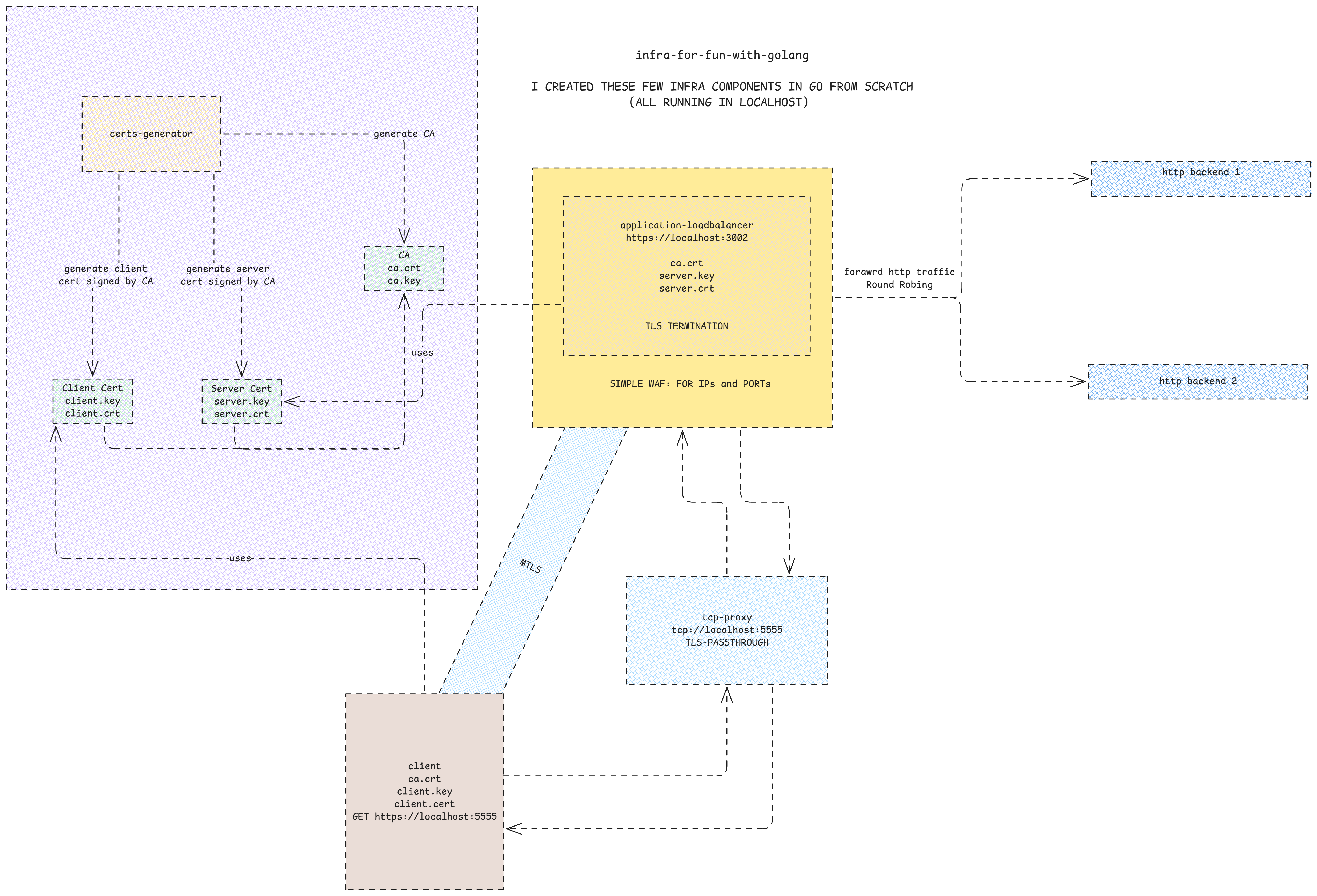

Overview: Infrastructure for Fun with Golang

The project includes several services and tools, all written from scratch in Go, that simulate real-world infrastructure pieces.

What you can learn from this project

- TLS termination and mutual TLS (mTLS) enforcement

- Building simple infrastructure tools in Go (application load balancer, TCP proxy, mTLS, and more)

- Differentiating network-layer vs application-layer proxying

- Writing a basic WAF and applying IP/port filtering

- Managing certificates with a self-created CA

- How to run a stress test

Current Components

Application Load Balancer

Supports round-robin request distribution

Terminates HTTPS connections

Enforces mutual TLS (mTLS)

Includes a basic Web Application Firewall (WAF) using IP and port allowlists
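A minimal sketch of the round-robin part in Go, assuming plain-HTTP backends like the ones in the project's sample config. The names roundRobin, newRoundRobin, and pick and the listen port 3000 are hypothetical, and TLS, mTLS, and the WAF are left out to keep it short; this is an illustration, not the project's actual code.

```go
package main

import (
	"errors"
	"log"
	"net/http"
	"net/http/httputil"
	"net/url"
	"sync/atomic"
)

// roundRobin forwards each incoming request to the next backend in turn.
type roundRobin struct {
	backends []*url.URL
	proxies  []*httputil.ReverseProxy
	counter  atomic.Uint64 // shared rotation position, safe for concurrent use
}

func newRoundRobin(rawURLs []string) (*roundRobin, error) {
	if len(rawURLs) == 0 {
		return nil, errors.New("at least one backend is required")
	}
	rr := &roundRobin{}
	for _, raw := range rawURLs {
		u, err := url.Parse(raw)
		if err != nil {
			return nil, err
		}
		rr.backends = append(rr.backends, u)
		rr.proxies = append(rr.proxies, httputil.NewSingleHostReverseProxy(u))
	}
	return rr, nil
}

// pick returns the index of the next backend. The atomic counter keeps the
// rotation correct when many requests arrive at the same time.
func (rr *roundRobin) pick() int {
	n := rr.counter.Add(1) - 1
	return int(n % uint64(len(rr.backends)))
}

func (rr *roundRobin) ServeHTTP(w http.ResponseWriter, r *http.Request) {
	rr.proxies[rr.pick()].ServeHTTP(w, r)
}

func main() {
	rr, err := newRoundRobin([]string{"http://localhost:8080", "http://localhost:8081"})
	if err != nil {
		log.Fatal(err)
	}
	// Plain HTTP on a hypothetical port; the real balancer serves HTTPS with mTLS.
	log.Fatal(http.ListenAndServe(":3000", rr))
}
```

The atomic counter is the only shared state, so no mutex is needed, and httputil.ReverseProxy takes care of rewriting the request and streaming the response back.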

TCP Proxy

Forwards raw bytes without application-layer logic

Useful for comparing network-layer proxying vs TLS-terminating proxies
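To make the contrast concrete, here is a sketch of a byte-forwarding proxy using only Go's net and io packages. The listen port 4000 is a hypothetical choice (the post doesn't give the proxy's port), and startProxy, proxyConn, and closeWrite are illustrative names. Because the proxy never reads the payload, a TLS session passes through untouched and is terminated by the upstream.

```go
package main

import (
	"io"
	"log"
	"net"
)

// closeWrite half-closes a connection when supported (*net.TCPConn and
// *tls.Conn both implement CloseWrite), signalling EOF to the peer while
// still allowing reads in the other direction.
func closeWrite(c net.Conn) {
	if cw, ok := c.(interface{ CloseWrite() error }); ok {
		_ = cw.CloseWrite()
	}
}

// proxyConn copies raw bytes between client and upstream in both directions
// without parsing anything.
func proxyConn(client net.Conn, upstreamAddr string) {
	defer client.Close()
	upstream, err := net.Dial("tcp", upstreamAddr)
	if err != nil {
		log.Printf("dial %s: %v", upstreamAddr, err)
		return
	}
	defer upstream.Close()

	done := make(chan struct{}, 2)
	go func() { // client -> upstream
		io.Copy(upstream, client)
		closeWrite(upstream)
		done <- struct{}{}
	}()
	go func() { // upstream -> client
		io.Copy(client, upstream)
		closeWrite(client)
		done <- struct{}{}
	}()
	<-done
	<-done
}

// startProxy listens on listenAddr and forwards every accepted connection to
// upstreamAddr. It returns the actual listen address and a stop function.
func startProxy(listenAddr, upstreamAddr string) (string, func(), error) {
	ln, err := net.Listen("tcp", listenAddr)
	if err != nil {
		return "", nil, err
	}
	go func() {
		for {
			conn, err := ln.Accept()
			if err != nil {
				return // listener closed
			}
			go proxyConn(conn, upstreamAddr)
		}
	}()
	return ln.Addr().String(), func() { ln.Close() }, nil
}

func main() {
	// Hypothetical proxy port; the upstream is the load balancer from the config.
	addr, _, err := startProxy(":4000", "localhost:3002")
	if err != nil {
		log.Fatal(err)
	}
	log.Printf("tcp proxy listening on %s", addr)
	select {} // serve until the process is killed
}
```

The half-close lets each direction finish independently, so a client that is done sending can still receive the full response. The sketch assumes both peers eventually close their side; a hardened proxy would also add idle timeouts.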

mTLS-Enabled Client

The client is given its own certificate and private key, which it presents to the server during the TLS handshake. This exercises the mTLS flow and lets the server confirm that the client is trusted.
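A sketch of what such a client can look like with Go's standard library, assuming the certs/ file layout from the sample config and the load balancer address https://localhost:3002/ (to go through the TCP proxy you would point it at the proxy's listen address instead). newMTLSClient is a hypothetical name, and hostname verification assumes the server certificate is issued for "localhost".

```go
package main

import (
	"crypto/tls"
	"crypto/x509"
	"errors"
	"io"
	"log"
	"net/http"
	"os"
)

// newMTLSClient builds an HTTP client that trusts only the given CA and
// presents the given client certificate when the server asks for one.
func newMTLSClient(caPath, certPath, keyPath string) (*http.Client, error) {
	caPEM, err := os.ReadFile(caPath)
	if err != nil {
		return nil, err
	}
	roots := x509.NewCertPool()
	if !roots.AppendCertsFromPEM(caPEM) {
		return nil, errors.New("no CA certificates found in " + caPath)
	}
	clientCert, err := tls.LoadX509KeyPair(certPath, keyPath)
	if err != nil {
		return nil, err
	}
	tlsCfg := &tls.Config{
		RootCAs:      roots,                         // verify the server against the local CA
		Certificates: []tls.Certificate{clientCert}, // sent to the server during the handshake
		MinVersion:   tls.VersionTLS12,
	}
	return &http.Client{Transport: &http.Transport{TLSClientConfig: tlsCfg}}, nil
}

func main() {
	client, err := newMTLSClient("certs/ca.crt", "certs/client.crt", "certs/client.key")
	if err != nil {
		log.Fatal(err)
	}
	resp, err := client.Get("https://localhost:3002/")
	if err != nil {
		log.Fatal(err) // a rejected client certificate surfaces here
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		log.Fatal(err)
	}
	log.Printf("%s: %s", resp.Status, body)
}
```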

Certificate Generator

Creates a local Certificate Authority (CA)

Issues certificates for both clients and servers
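The project drives certificate creation through make targets. As a sketch of what a generator like this can do with Go's crypto/x509 package, assuming ECDSA P-256 keys, certificates issued for "localhost", and the certs/ layout from the sample config (certs/ca.key is an extra, hypothetical file), here is one possible version. The names sign, newCA, issueCert, verifyLeaf, and writePEM and the validity periods are illustrative, not the project's.

```go
package main

import (
	"crypto/ecdsa"
	"crypto/elliptic"
	"crypto/rand"
	"crypto/x509"
	"crypto/x509/pkix"
	"encoding/pem"
	"log"
	"math/big"
	"os"
	"time"
)

// usages maps each role to the extended key usage its certificate needs.
var usages = map[string]x509.ExtKeyUsage{
	"server": x509.ExtKeyUsageServerAuth,
	"client": x509.ExtKeyUsageClientAuth,
}

// sign creates a P-256 key and a certificate from tmpl (random serial), signed by parent or self-signed if parent is nil.
func sign(tmpl, parent *x509.Certificate, parentKey *ecdsa.PrivateKey) (*x509.Certificate, *ecdsa.PrivateKey, error) {
	key, err := ecdsa.GenerateKey(elliptic.P256(), rand.Reader)
	if err != nil {
		return nil, nil, err
	}
	if tmpl.SerialNumber, err = rand.Int(rand.Reader, new(big.Int).Lsh(big.NewInt(1), 128)); err != nil {
		return nil, nil, err
	}
	tmpl.NotBefore = time.Now().Add(-time.Hour) // tolerate small clock skew
	if parent == nil {
		parent, parentKey = tmpl, key
	}
	der, err := x509.CreateCertificate(rand.Reader, tmpl, parent, &key.PublicKey, parentKey)
	if err != nil {
		return nil, nil, err
	}
	cert, err := x509.ParseCertificate(der)
	return cert, key, err
}

// newCA creates the self-signed local certificate authority.
func newCA() (*x509.Certificate, *ecdsa.PrivateKey, error) {
	return sign(&x509.Certificate{
		Subject:               pkix.Name{CommonName: "Local Dev CA"},
		NotAfter:              time.Now().AddDate(1, 0, 0),
		IsCA:                  true,
		BasicConstraintsValid: true,
		KeyUsage:              x509.KeyUsageCertSign | x509.KeyUsageCRLSign,
	}, nil, nil)
}

// issueCert creates a CA-signed certificate for "localhost" with the usage for role.
func issueCert(ca *x509.Certificate, caKey *ecdsa.PrivateKey, role string) (*x509.Certificate, *ecdsa.PrivateKey, error) {
	return sign(&x509.Certificate{
		Subject:     pkix.Name{CommonName: role},
		DNSNames:    []string{"localhost"},
		NotAfter:    time.Now().AddDate(0, 3, 0),
		KeyUsage:    x509.KeyUsageDigitalSignature,
		ExtKeyUsage: []x509.ExtKeyUsage{usages[role]},
	}, ca, caKey)
}

// verifyLeaf checks that leaf chains up to ca and is valid for role.
func verifyLeaf(ca, leaf *x509.Certificate, role string) error {
	roots := x509.NewCertPool()
	roots.AddCert(ca)
	_, err := leaf.Verify(x509.VerifyOptions{Roots: roots, KeyUsages: []x509.ExtKeyUsage{usages[role]}})
	return err
}

// writePEM saves certs/<name>.crt and certs/<name>.key (PKCS#8) as PEM files.
func writePEM(name string, cert *x509.Certificate, key *ecdsa.PrivateKey) error {
	keyDER, err := x509.MarshalPKCS8PrivateKey(key)
	if err == nil {
		err = os.WriteFile("certs/"+name+".crt", pem.EncodeToMemory(&pem.Block{Type: "CERTIFICATE", Bytes: cert.Raw}), 0o644)
	}
	if err == nil {
		err = os.WriteFile("certs/"+name+".key", pem.EncodeToMemory(&pem.Block{Type: "PRIVATE KEY", Bytes: keyDER}), 0o600)
	}
	return err
}

func main() {
	check := func(err error) {
		if err != nil {
			log.Fatal(err)
		}
	}
	check(os.MkdirAll("certs", 0o755))
	ca, caKey, err := newCA()
	check(err)
	check(writePEM("ca", ca, caKey))
	for _, role := range []string{"server", "client"} {
		cert, key, err := issueCert(ca, caKey, role)
		check(err)
		check(writePEM(role, cert, key))
	}
}
```

Giving the client certificate the client-auth usage and the server certificate the server-auth usage means each one only verifies in its intended role.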

How to Run the Stack

This project is configured to run using make commands for simplicity. Here’s the step-by-step process:

Generate a Local CA

make generate_ca

This command creates a local CA that you'll use to sign the client and server certificates.

Generate Certificates

Generate certificates for the server and the client, signed by the local CA:

make generate_self_signed_cert for=server crt=server.crt key=server.key

make generate_self_signed_cert for=client crt=client.crt key=client.key

These will be used for mTLS authentication.

Run the Application Load Balancer

make run_application_load_balancer

The load balancer loads its config from app_loadbalancer_config.yaml. Here's a sample:

    host: localhost:3002
    protocol: https
    port: 3002
    ca_location: certs/ca.crt
    cert_location: certs/server.crt
    key_location: certs/server.key
    backend_servers:
      - http://localhost:8080
      - http://localhost:8081
    waf:
      allowedIPs:
        - 10.0.0.0/8
        - 192.168.1.0/24
        - 172.16.0.0/12
        - 127.0.0.1
      allowedPorts:
        - 80
        - 443
        - "*"

Yes, it even has a mini WAF!
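The post doesn't show how the allowlist is applied, so here is a sketch of one way to check requests against the waf section using Go's net/netip. Assumptions, all hypothetical: entries are CIDR ranges or single IPs, ports are read as strings, "*" means any port, and the port being checked is the load balancer's local listening port (the post doesn't say which port the real WAF compares). ipPortAllowlist, newAllowlist, allows, and middleware are illustrative names.

```go
package main

import (
	"fmt"
	"log"
	"net"
	"net/http"
	"net/netip"
	"strconv"
)

// ipPortAllowlist is a hypothetical in-memory form of the waf config section.
type ipPortAllowlist struct {
	prefixes []netip.Prefix
	ports    map[uint16]bool
	anyPort  bool // set by a "*" entry
}

// newAllowlist accepts CIDR ranges ("10.0.0.0/8") or single IPs ("127.0.0.1"),
// plus port numbers or "*" for any port.
func newAllowlist(ips, ports []string) (*ipPortAllowlist, error) {
	a := &ipPortAllowlist{ports: make(map[uint16]bool)}
	for _, entry := range ips {
		if p, err := netip.ParsePrefix(entry); err == nil {
			a.prefixes = append(a.prefixes, p.Masked())
			continue
		}
		addr, err := netip.ParseAddr(entry)
		if err != nil {
			return nil, fmt.Errorf("invalid IP or CIDR %q", entry)
		}
		// A single IP becomes a /32 (or /128 for IPv6) prefix.
		a.prefixes = append(a.prefixes, netip.PrefixFrom(addr, addr.BitLen()))
	}
	for _, entry := range ports {
		if entry == "*" {
			a.anyPort = true
			continue
		}
		n, err := strconv.ParseUint(entry, 10, 16)
		if err != nil {
			return nil, fmt.Errorf("invalid port %q", entry)
		}
		a.ports[uint16(n)] = true
	}
	return a, nil
}

// allows reports whether ip falls inside an allowed range and port is allowed.
func (a *ipPortAllowlist) allows(ip string, port uint16) bool {
	addr, err := netip.ParseAddr(ip)
	if err != nil || (!a.anyPort && !a.ports[port]) {
		return false
	}
	addr = addr.Unmap() // treat ::ffff:10.1.2.3 like 10.1.2.3
	for _, p := range a.prefixes {
		if p.Contains(addr) {
			return true
		}
	}
	return false
}

// middleware rejects requests whose client IP or local listening port is not allowed.
func (a *ipPortAllowlist) middleware(next http.Handler) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		host, _, err := net.SplitHostPort(r.RemoteAddr)
		var port uint16
		if la, ok := r.Context().Value(http.LocalAddrContextKey).(*net.TCPAddr); ok {
			port = uint16(la.Port)
		}
		if err != nil || !a.allows(host, port) {
			http.Error(w, "forbidden", http.StatusForbidden)
			return
		}
		next.ServeHTTP(w, r)
	})
}

func main() {
	a, err := newAllowlist([]string{"127.0.0.1"}, []string{"*"})
	if err != nil {
		log.Fatal(err)
	}
	hello := http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprintln(w, "hello")
	})
	log.Fatal(http.ListenAndServe("127.0.0.1:3000", a.middleware(hello)))
}
```

With "*" in allowedPorts, as in the sample config, the port check always passes, so the IP ranges do all the filtering.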

Run the Backend Servers

These servers will receive traffic from the load balancer:

make run_backend_servers

The load balancer terminates TLS and forwards the request to these local backends.

Run the TCP Proxy

This is a very simple network-level proxy that forwards raw bytes and doesn't parse or inspect HTTP or TLS:

make run_tcp_proxy

The traffic now flows like this:

client → TCP Proxy → Application Load Balancer → Backend Servers

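Run the Client

Finally, the mTLS-enabled client connects to the load balancer through the TCP proxy. Because the proxy only forwards bytes, the TLS handshake runs end-to-end between the client and the load balancer, which enforces certificate validation: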

make run_client
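On the load balancer side, enforcing certificate validation is mostly a matter of tls.Config. A sketch, assuming the ca_location, cert_location, and key_location paths from the sample config; mtlsServerConfig is a hypothetical name, and the handler simply greets the client by its certificate's common name instead of proxying.

```go
package main

import (
	"crypto/tls"
	"crypto/x509"
	"errors"
	"log"
	"net/http"
	"os"
)

// mtlsServerConfig presents the server certificate and rejects any client
// that does not present a certificate signed by the CA at caPath.
func mtlsServerConfig(caPath, certPath, keyPath string) (*tls.Config, error) {
	caPEM, err := os.ReadFile(caPath)
	if err != nil {
		return nil, err
	}
	clientCAs := x509.NewCertPool()
	if !clientCAs.AppendCertsFromPEM(caPEM) {
		return nil, errors.New("no CA certificates found in " + caPath)
	}
	serverCert, err := tls.LoadX509KeyPair(certPath, keyPath)
	if err != nil {
		return nil, err
	}
	return &tls.Config{
		Certificates: []tls.Certificate{serverCert},
		ClientCAs:    clientCAs,
		ClientAuth:   tls.RequireAndVerifyClientCert, // the "mutual" in mTLS
		MinVersion:   tls.VersionTLS12,
	}, nil
}

func main() {
	cfg, err := mtlsServerConfig("certs/ca.crt", "certs/server.crt", "certs/server.key")
	if err != nil {
		log.Fatal(err)
	}
	srv := &http.Server{
		Addr:      ":3002",
		TLSConfig: cfg,
		Handler: http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
			// The client certificate has already been verified at this point.
			w.Write([]byte("hello, " + r.TLS.PeerCertificates[0].Subject.CommonName + "\n"))
		}),
	}
	// Empty file arguments: the certificate comes from TLSConfig.Certificates.
	log.Fatal(srv.ListenAndServeTLS("", ""))
}
```

With tls.RequireAndVerifyClientCert, a client that presents no certificate, or one not signed by the local CA, fails the handshake before any handler runs.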

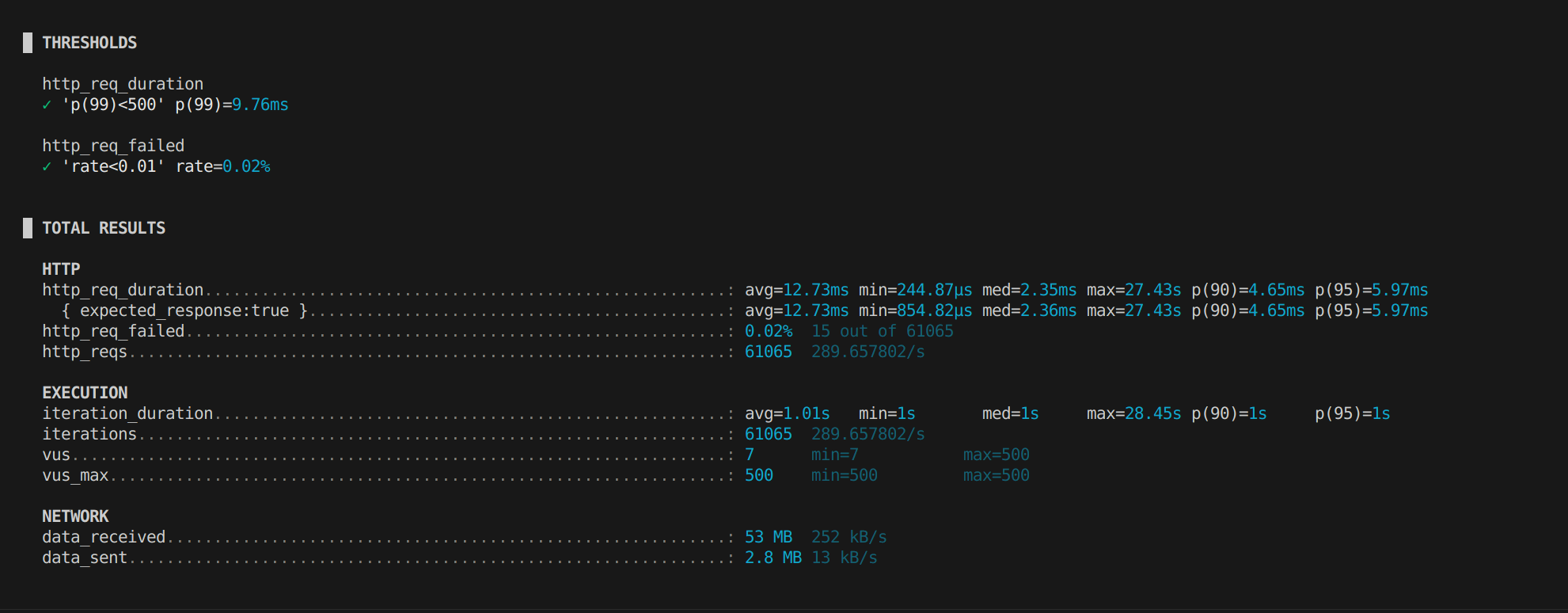

Stress Testing the Load Balancer

Once everything was in place, I ran a stress test to see how the application load balancer performs under pressure.

I used k6 by Grafana — a modern performance testing tool I discovered through Dániel Mohácsi’s talk at KCD Budapest: "Beyond Functionality: Performance Testing with Grafana k6."

Test Configuration

500 concurrent virtual users

61,065 total requests

Results Summary

99% of requests completed under 9.76ms

Average latency: 12.73ms

Failure rate: 0.02% (15 failed requests)

Longest request: 27.43 seconds

Despite the low failure rate and fast response times for most requests, a few outliers took significantly longer than expected. That’s something I’ll be debugging next.
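The summary numbers themselves show how heavy that tail is. Assuming the average and the 99th percentile are computed over the same 61,065 requests, a quick back-of-the-envelope check:

$$
\begin{aligned}
\text{failure rate} &= \tfrac{15}{61{,}065} \approx 0.025\% \quad (\text{reported as } 0.02\%) \\
\text{total latency} &\approx 61{,}065 \times 12.73\ \text{ms} \approx 777{,}357\ \text{ms} \\
\text{fastest 99\% } (\ge 60{,}455 \text{ requests}) &\le 60{,}455 \times 9.76\ \text{ms} \approx 590{,}041\ \text{ms} \\
\text{slowest 1\% } (\le 610 \text{ requests}) &\ge 777{,}357 - 590{,}041 = 187{,}316\ \text{ms} \\
\text{their mean latency} &\ge 187{,}316 / 610 \approx 307\ \text{ms} \\
\text{share of the 27.43 s request} &= 27{,}430 / 61{,}065 \approx 0.45\ \text{ms of the average}
\end{aligned}
$$

So the average sits above the p99 because the slowest 1% must average at least about 307 ms each; the single 27.43-second request accounts for only about 0.45 ms of the 12.73 ms average.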

I also encountered this curious error during the test:

stream error: stream id 203; INTERNAL_ERROR; received from peer

This occurred under high load.
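INTERNAL_ERROR is an HTTP/2 error code, and a "stream error ... received from peer" means the other end (here most likely the load balancer) reset that single stream rather than the whole connection. Assuming the load balancer uses Go's default HTTPS server, which negotiates HTTP/2 automatically, one way to narrow this down is to rerun the same k6 test with HTTP/2 disabled on the server and see whether the error disappears. A sketch, where newHTTP1OnlyServer is a hypothetical name (setting GODEBUG=http2server=0 in the environment has a similar effect without code changes):

```go
package main

import (
	"crypto/tls"
	"log"
	"net/http"
)

// newHTTP1OnlyServer returns a server that never negotiates HTTP/2.
// Per the net/http docs, a non-nil TLSNextProto disables the automatic
// HTTP/2 support, so an empty map leaves only HTTP/1.x over TLS.
func newHTTP1OnlyServer(addr string, h http.Handler) *http.Server {
	return &http.Server{
		Addr:         addr,
		Handler:      h,
		TLSNextProto: make(map[string]func(*http.Server, *tls.Conn, http.Handler)),
	}
}

func main() {
	srv := newHTTP1OnlyServer(":3002", http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		w.Write([]byte(r.Proto + "\n")) // echoes the negotiated protocol, e.g. HTTP/1.1
	}))
	log.Fatal(srv.ListenAndServeTLS("certs/server.crt", "certs/server.key"))
}
```

If the error only shows up over HTTP/2, one candidate worth checking is the proxying path: Go's HTTP/2 server resets a stream with INTERNAL_ERROR when a handler panics, and httputil.ReverseProxy deliberately panics with http.ErrAbortHandler when copying a backend response fails partway through.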

Next Steps

Investigate the outliers, including the 27-second response

Debug the INTERNAL_ERROR under high load

Improve load balancer error handling and timeout policies
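For the timeout part, Go's net/http exposes the relevant knobs directly on http.Server and http.Transport. A sketch with placeholder values (they are assumptions to be tuned against the k6 results, not recommendations); newBackendProxy and newServer are illustrative names, not the project's functions.

```go
package main

import (
	"log"
	"net"
	"net/http"
	"net/http/httputil"
	"net/url"
	"time"
)

// newBackendProxy builds a reverse proxy with explicit upstream timeouts and
// an error handler that answers 502 instead of leaving the client waiting.
func newBackendProxy(rawURL string) (*httputil.ReverseProxy, error) {
	target, err := url.Parse(rawURL)
	if err != nil {
		return nil, err
	}
	p := httputil.NewSingleHostReverseProxy(target)
	p.Transport = &http.Transport{
		DialContext: (&net.Dialer{
			Timeout:   3 * time.Second, // give up on backends that don't accept
			KeepAlive: 30 * time.Second,
		}).DialContext,
		ResponseHeaderTimeout: 5 * time.Second, // bound the wait for response headers
		MaxIdleConnsPerHost:   100,             // default is 2; extra idle connections are closed, not reused
		IdleConnTimeout:       90 * time.Second,
	}
	p.ErrorHandler = func(w http.ResponseWriter, r *http.Request, err error) {
		log.Printf("backend %s: %v", target.Host, err)
		http.Error(w, "bad gateway", http.StatusBadGateway)
	}
	return p, nil
}

// newServer sets client-facing timeouts so slow or stalled clients cannot
// hold connections open indefinitely.
func newServer(addr string, h http.Handler) *http.Server {
	return &http.Server{
		Addr:              addr,
		Handler:           h,
		ReadHeaderTimeout: 5 * time.Second,
		ReadTimeout:       15 * time.Second,
		WriteTimeout:      15 * time.Second,
		IdleTimeout:       60 * time.Second,
	}
}

func main() {
	p, err := newBackendProxy("http://localhost:8080")
	if err != nil {
		log.Fatal(err)
	}
	log.Fatal(newServer(":3000", p).ListenAndServe())
}
```

Timeouts like these turn a request such as the 27-second outlier into a fast error instead of a long wait; whether that is the right trade-off depends on what the outliers turn out to be.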

Final Thoughts

This project has been a rewarding way to explore core infrastructure principles while writing real code. If you're interested in systems programming, networking, or just enjoy breaking your own tools under load — building something like this from scratch is well worth it.

And if you’re testing performance, definitely give k6 a try. It’s simple, scriptable, and integrates well with CI/CD or developer workflows.